The Hidden Danger of Weak Signals: How False Positive Tuning Creates False Negatives

TL;DR

- SOCs tune out noisy alerts to reduce false positives, but this often creates dangerous false negatives

- Weak signals get suppressed, giving attackers months of undetected access

- The real problem is the lack of scalable investigation capacity

- AI-driven investigation can preserve full visibility while eliminating manual workload

Every SOC analyst knows the feeling. You arrive Monday morning to find a ton of alerts waiting in the queue. Most are false positives: the same noisy authentication failures, benign process executions, and vendor scanning activity you've seen a thousand times before.

So you do what any reasonable security team would do: you tune by adjusting rules and adding exceptions.

And in doing so, you may have just opened the door to your next breach.

The False Positive Obsession

The cybersecurity industry has a false positive problem, and we talk about it constantly. Industry research reports that up to 53% of alerts investigated by analysts turn out to be benign.

Alert fatigue is real, burnout is rampant, and the pressure to "clean up the dashboard" is intense.

But while we've been laser-focused on eliminating false positives, we've created an equally dangerous problem that gets far less attention: false negatives.

The problem with false negatives is that they are invisible until your incident response team is picking through the rubble of a breach that's been running for months.

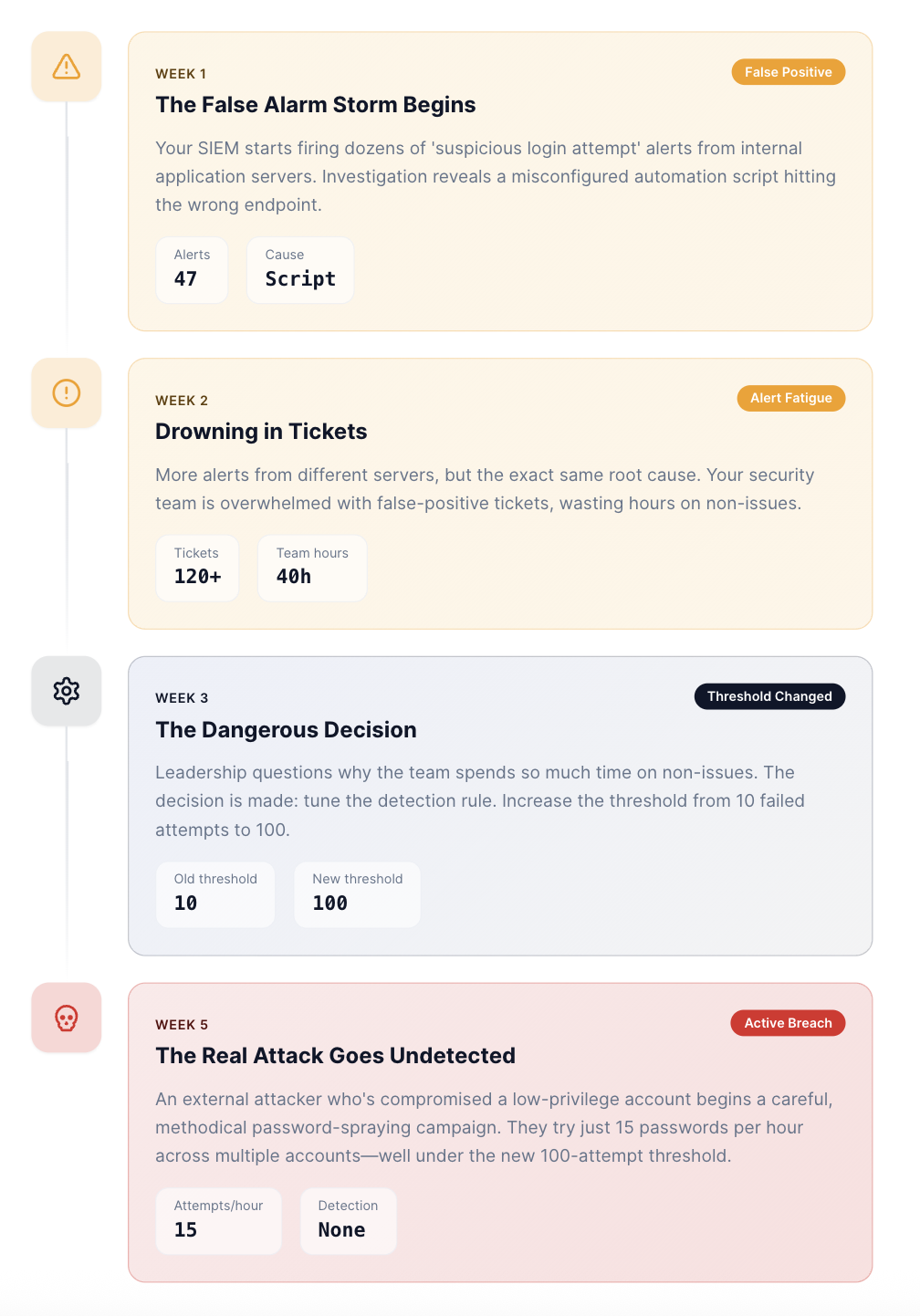

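Consider a scenario that plays out in SOCs every day:

Your team raised the threshold on a noisy failed-login rule because a misconfigured automation script kept tripping it. Then an attacker runs a slow password spray: 15 failed logins spread across 8 accounts, paced to stay under the new threshold. Your detection rule doesn't fire, and the attacker eventually succeeds. Six months later, you discover 400GB of customer data was stolen.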

Why Should You Care About Weak Signals?

Advanced persistent threats don't announce themselves with obvious indicators. Modern attackers often understand detection engineering as well as the defenders who write the rules.

According to Mandiant's M-Trends 2024 report, the global median dwell time for intrusions is 10 days, and that's only for breaches that get detected. The ones that leverage detection blind spots? They persist much longer.

The weak signals your team suppressed are often:

- Reconnaissance activity designed to map your environment without triggering volume-based alerts

- Credential stuffing at rates carefully calibrated to stay below your thresholds

- Living-off-the-land techniques that generate subtle anomalies rather than obvious malicious behavior

- Initial access attempts that fail repeatedly but eventually succeed after enough tries

When you tune away these signals, you aren't just removing noise; you're removing visibility into the earliest stages of an attack.
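To see how tuning creates a blind spot, consider a minimal sketch of a volume-based rule: alert when one source IP produces at least N failed logins inside a sliding one-hour window. The events, IP addresses, account names, and thresholds below are all hypothetical. Raising the threshold from 3 to 10 silences a noisy automation script, but it also silences a password spray paced at one attempt every 20 minutes.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def sources_over_threshold(events, threshold, window):
    """Return source IPs with at least `threshold` failed logins inside any `window`."""
    times_by_source = defaultdict(list)
    for timestamp, source_ip, _account in events:
        times_by_source[source_ip].append(timestamp)

    alerting = set()
    for source_ip, times in times_by_source.items():
        times.sort()
        start = 0
        for end in range(len(times)):
            # Slide the window start forward until the span is at most `window`.
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                alerting.add(source_ip)
                break
    return alerting


t0 = datetime(2024, 1, 1, 2, 0)

# Hypothetical noisy automation script: one failure every 10 minutes for 3 hours.
script = [(t0 + timedelta(minutes=10 * i), "10.0.0.5", "svc_backup") for i in range(18)]

# Hypothetical slow password spray: 15 failures, one every 20 minutes, across 8 accounts.
spray = [(t0 + timedelta(minutes=20 * i), "203.0.113.7", f"user{i % 8}") for i in range(15)]

events = script + spray
hour = timedelta(hours=1)

before_tuning = sources_over_threshold(events, threshold=3, window=hour)
after_tuning = sources_over_threshold(events, threshold=10, window=hour)
print(sorted(before_tuning))  # ['10.0.0.5', '203.0.113.7']
print(sorted(after_tuning))   # []
```

Nothing about the spray changed between the two runs; only the threshold did. The script peaks at 7 failures per hour and the spray at 4, so a threshold high enough to mute the script mutes the spray too.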

To Tune or Not to Tune?

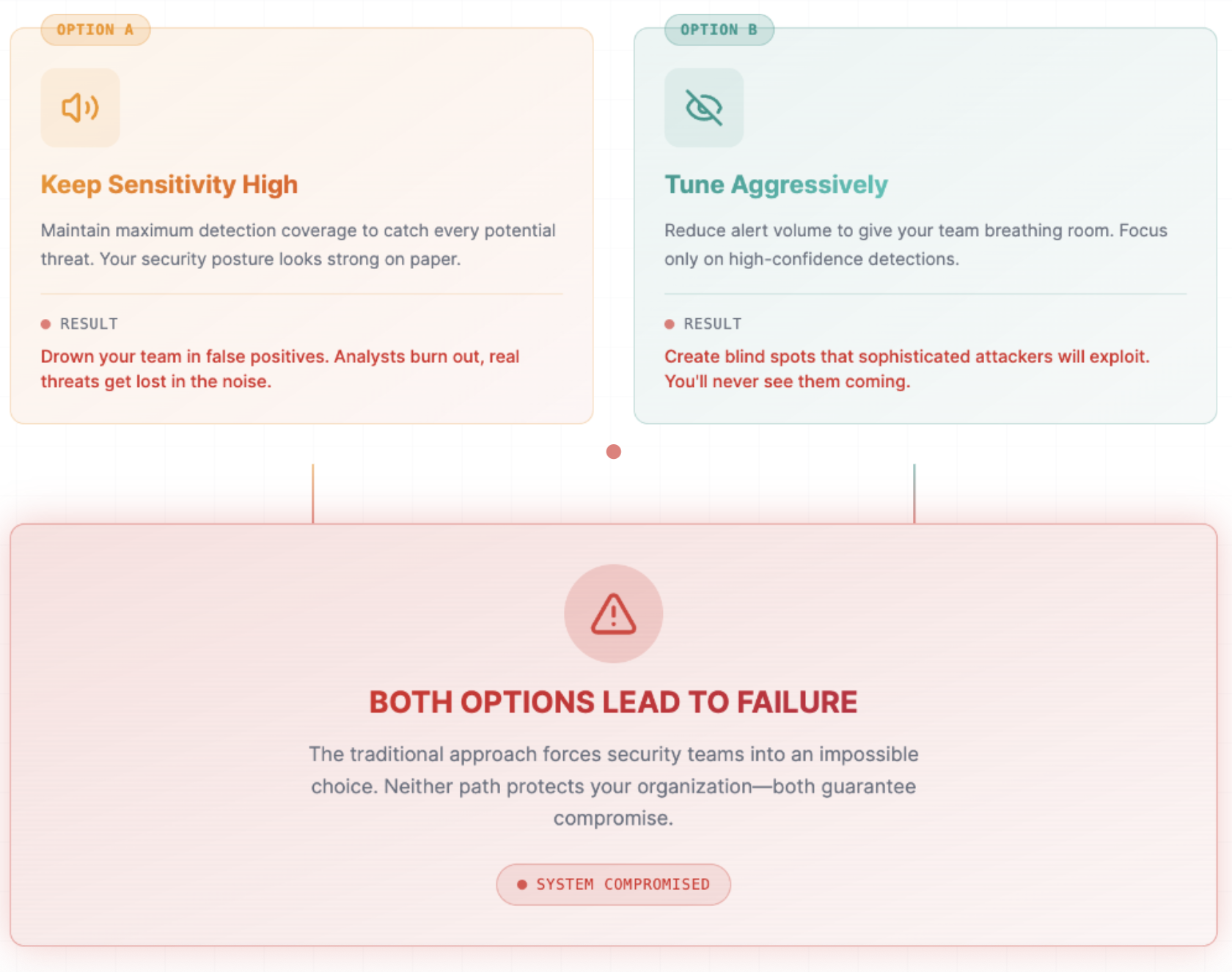

Here's the dilemma facing every SOC leader: you need broad, sensitive detection to catch sophisticated threats, but you don't have the analyst capacity to investigate every low-confidence alert.

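Traditional approaches force you to choose between two options:

- Keep sensitive rules enabled and bury your analysts in alerts they can't investigate

- Tune aggressively and accept blind spots where attackers can hide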

The fundamental problem is that human-scale investigation cannot keep pace with machine-scale attack surfaces. You can't manually investigate 10,000 weak signals, and that's where AI comes in.
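A back-of-the-envelope calculation shows the size of the gap. The per-alert triage time and shift length below are assumptions chosen for illustration, not measured figures; substitute your own SOC's numbers.

```python
import math


def analyst_shifts_needed(alert_count, minutes_per_alert, hours_per_shift):
    """Estimate total analyst-hours and shifts needed to triage every alert manually."""
    total_hours = alert_count * minutes_per_alert / 60
    return total_hours, math.ceil(total_hours / hours_per_shift)


# Assumed inputs: 10,000 weak signals, 15 minutes of manual triage each,
# and 6 hours of hands-on-queue time per analyst shift.
hours, shifts = analyst_shifts_needed(10_000, minutes_per_alert=15, hours_per_shift=6)
print(f"{hours:.0f} analyst-hours, about {shifts} shifts")  # 2500 analyst-hours, about 417 shifts
```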

Achieving Investigation Depth with AI

What if you didn't have to choose? What if you could maintain comprehensive visibility without overwhelming your analysts?

Instead of forcing humans to triage machine-generated alerts, Qevlar AI uses graph-based AI to investigate and enrich every alert, even the weakest signals, without LLM hallucinations.

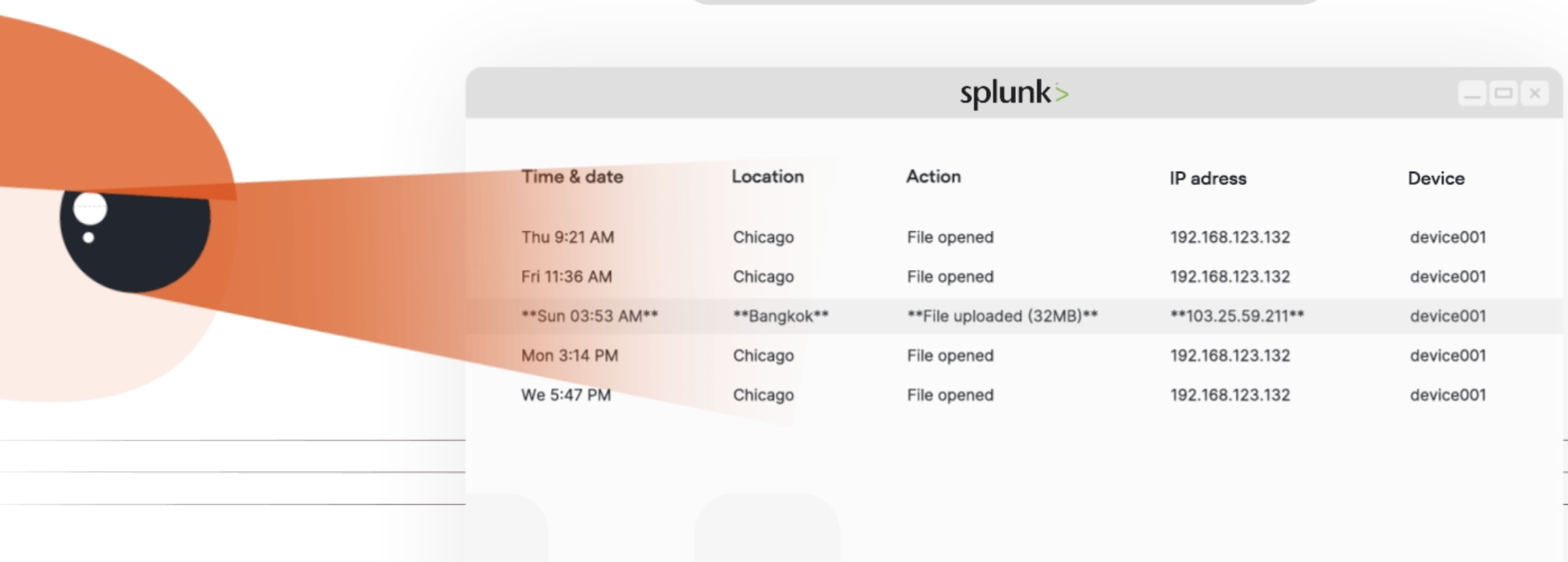

When that slow password spray appears, AI doesn't just see 15 failed logins. It sees 15 failures across 8 accounts, from an IP address with no prior legitimate access, during off-hours, using authentication patterns inconsistent with your organization's standards.
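The contextual correlation described above can be illustrated with a simple rule-based sketch. This is not Qevlar AI's implementation, whose graph-based internals are not described here; it only shows the kind of context that turns "15 failed logins" into findings an analyst can act on. The `FailedLogin` record, its `auth_method` field, the known-good IP set, and the 08:00 to 18:00 business hours are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FailedLogin:
    timestamp: datetime
    source_ip: str
    account: str
    auth_method: str  # hypothetical field, e.g. "kerberos" or "ntlm"


def enrich_failed_logins(events, known_good_ips, expected_auth_methods, business_hours=(8, 18)):
    """Turn a cluster of failed logins into human-readable contextual findings."""
    findings = []
    accounts = {e.account for e in events}
    if len(accounts) > 1:
        findings.append(f"{len(events)} failures across {len(accounts)} accounts")

    unknown_sources = {e.source_ip for e in events} - known_good_ips
    if unknown_sources:
        findings.append("no prior legitimate access from " + ", ".join(sorted(unknown_sources)))

    open_hour, close_hour = business_hours
    if events and all(not (open_hour <= e.timestamp.hour < close_hour) for e in events):
        findings.append("all attempts outside business hours")

    unusual_methods = {e.auth_method for e in events} - expected_auth_methods
    if unusual_methods:
        findings.append("unexpected auth methods: " + ", ".join(sorted(unusual_methods)))
    return findings


t0 = datetime(2024, 1, 1, 2, 0)
spray = [
    FailedLogin(t0 + timedelta(minutes=20 * i), "203.0.113.7", f"user{i % 8}", "ntlm")
    for i in range(15)
]
findings = enrich_failed_logins(
    spray, known_good_ips={"10.0.0.5"}, expected_auth_methods={"kerberos"}
)
for finding in findings:
    print("-", finding)
# - 15 failures across 8 accounts
# - no prior legitimate access from 203.0.113.7
# - all attempts outside business hours
# - unexpected auth methods: ntlm
```

Each check on its own is weak evidence; the value comes from seeing all four together on the same cluster of events.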

That misconfigured automation script? AI recognizes the pattern, validates it against known legitimate activity, documents the finding, and closes the alert with a full audit trail.
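A much-simplified version of "validate, document, close" might compare an alert against an inventory of approved automation and write an audit record either way. The inventory, field names, and alert IDs below are hypothetical, and a real investigation would weigh far more context than a single allowlist lookup.

```python
import json
from datetime import datetime, timezone

# Hypothetical inventory of approved automation: (source_host, service_account) pairs.
KNOWN_AUTOMATION = {("build-runner-01", "svc_deploy")}


def triage_alert(alert, known_automation=KNOWN_AUTOMATION):
    """Close alerts that match approved automation; escalate everything else.

    Either way, return an audit entry recording what was checked and why.
    """
    key = (alert["source_host"], alert["account"])
    if key in known_automation:
        verdict, reason = "closed_benign", "matches approved automation inventory"
    else:
        verdict, reason = "escalate", "no matching known-legitimate activity"
    audit_entry = {
        "alert_id": alert["id"],
        "verdict": verdict,
        "reason": reason,
        "evidence": {"source_host": alert["source_host"], "account": alert["account"]},
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }
    return verdict, audit_entry


verdict, entry = triage_alert(
    {"id": "ALERT-1042", "source_host": "build-runner-01", "account": "svc_deploy"}
)
print(verdict)  # closed_benign
print(json.dumps(entry, indent=2))
```

Escalating by default keeps the failure mode safe: anything the inventory doesn't explain goes to a human instead of being silently closed.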

The same approach applies beyond authentication: Qevlar AI uses the same contextual correlation for every alert type, so even in the noisiest log streams, it surfaces the one action that doesn’t belong.

As Daniel Aldstam, Chief Security Officer of GlobalConnect, a Qevlar AI customer, puts it:

"With Qevlar AI, we've achieved the depth and consistency of investigation we've always aimed for. Every alert, no matter how subtle, is analyzed and documented in minutes. It has given us confidence, clarity, and the ability to scale without compromising quality."

Leading enterprises and MSSPs using Qevlar AI are seeing:

- Automated investigation of 100% of alerts

- Mean time to investigate (MTTI) reduced to under 3 minutes

- Up to 80% of alerts auto-closed

- No visibility sacrificed to manage analyst workload

Your detection rules should be optimized for comprehensive threat coverage, and your investigation layer should scale to handle the resulting alert volume. That frees your team to focus on complex cases and proactive work, and to build the SOC analyst skills needed for the new era of GenAI-driven threats.

Because somewhere in the noise you tuned away, the faintest signal may be the most dangerous one.