AI SOC solutions market: what has changed in 2025 and how to choose the best AI solution for your SOC

TL;DR

The AI SOC market has evolved faster in 2025 than in the previous three years combined.

What used to be a category defined by “AI copilots” and triage assistants has become a crowded landscape of agentic triage, investigation, and attempted autonomous response.

- Every major vendor, including Microsoft, CrowdStrike, Palo Alto, and Google, now ships some form of AI agentic automation.

- More than 70 standalone AI SOC platforms exist today (including Qevlar AI), and at least 26 received funding in 2025, showing strong market validation.

- Large vendors like Microsoft and CrowdStrike keep their AI tightly coupled to their own stacks, often with limited visibility into the reasoning behind their decisions. This makes outcomes harder to trust.

- Standalone AI SOC platforms take a different path: transparent investigations, vendor-agnostic integration, and more consistent results.

Despite the noise, the gap between what different solutions actually deliver is still wide.

This guide breaks down what really changed in 2025 and how to evaluate the tools behind the hype.

What Is a Standalone AI SOC Platform?

Think of it as an AI-powered analyst that sits above your entire stack, pulls in alerts from different sources, and runs full investigations end to end.

AI SOC platforms can:

- Enrich alerts with threat intelligence and business context

- Analyze observables

- Query logs

- Correlate activity

- Explain every step of their reasoning so analysts can review, trust, and act on the results

A good example is Qevlar AI, one of the most widely adopted standalone AI SOC platforms among MSSPs and Fortune Global 500 companies.

How AI SOC Platforms Differ From Each Other

On the surface, many AI SOC tools may look similar. In reality, they differ widely, and five questions expose the differences:

1. Who is the platform built for?

Some tools are built for large enterprise SOCs, others focus on MSSPs, and a few can support both at scale.

2. How deep does the enrichment go?

This greatly affects investigation quality. Ask whether the tool:

- Downloads and sandboxes files

- Uses business-specific and historical context

- Includes out-of-the-box threat intelligence

- Performs vision and semantic analysis for emails

3. Does the platform require a training or tuning period?

Some platforms work out of the box. Others need weeks of prompting, tuning, or customer-specific setup.

4. Does it support automated containment actions?

Only a few can handle containment safely across different environments.

5. How does it handle LLM stability?

The most mature platforms handle LLM randomness through orchestration frameworks.

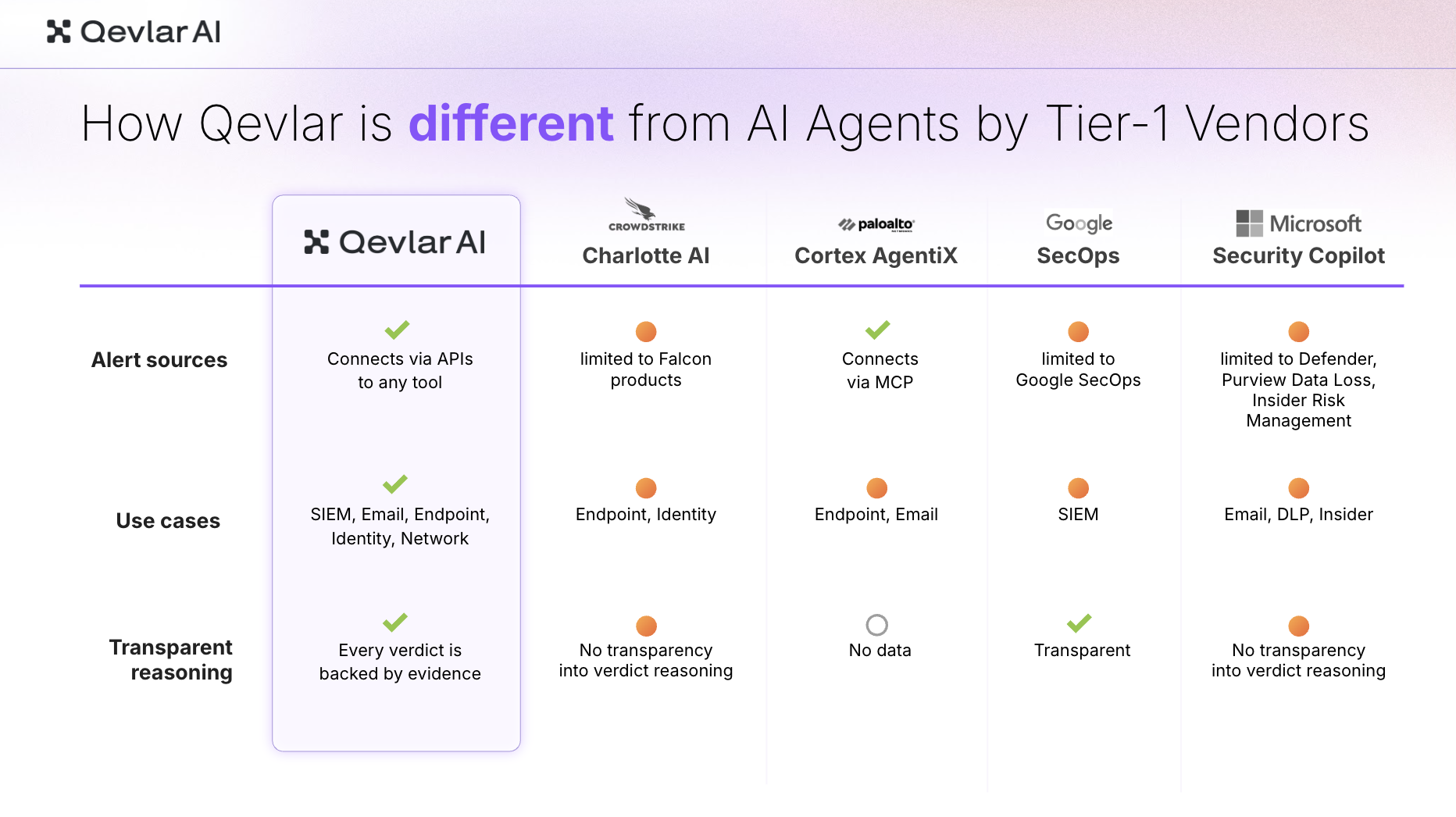

Standalone AI SOC Platforms vs Agentic Features From Tier-1 Vendors

Let’s look at how the agentic capabilities of CrowdStrike, Palo Alto, Google, and Microsoft compare with those of a standalone platform like Qevlar AI.

In a nutshell:

- Standalone AI SOC platforms integrate with whichever detection tools you use, which makes it possible to cover attack surfaces across SIEM, endpoint, identity, cloud, and email rather than being locked into one vendor’s suite.

- Transparency in AI reasoning varies widely across vendors. Dedicated AI SOC platforms usually reveal every step of the investigation, from observables to log queries.

- Many vendors promise high accuracy, yet solutions that rely solely on an LLM often produce inconsistent or random results. The more mature tools combine LLMs with orchestration layers that maintain stable, predictable reasoning.

Vendor Lock-In

CrowdStrike, Microsoft, and Google restrict their agents to their own products. For customers already deep in those ecosystems, this feels convenient. For everyone else, especially MSSPs and hybrid SOCs, it becomes a constraint.

Vendor-agnostic AI SOC platforms like Qevlar AI take the opposite route. They integrate through APIs with any SIEM, SOAR, XDR, EDR, email, or identity system.
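To make "vendor-agnostic" concrete, here is a minimal sketch of the adapter pattern such integrations commonly rely on: each upstream tool gets a small adapter that maps its payload into one shared alert shape. This is an illustration only, not a description of how Qevlar AI or any other product is built. The `Alert` fields, the adapter names, and every payload key are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Alert:
    """Common shape that every downstream investigation step works with."""
    source: str
    title: str
    severity: str
    entity: str  # host, user, or mailbox the alert is about


# One adapter per upstream tool. The payload keys below are invented for
# illustration and do not match any real product's API.
def from_edr(payload: Dict[str, Any]) -> Alert:
    return Alert("edr", payload["detection_name"], payload["sev"].lower(), payload["hostname"])


def from_email_gateway(payload: Dict[str, Any]) -> Alert:
    return Alert("email", payload["subject"], payload["risk"].lower(), payload["recipient"])


ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Alert]] = {
    "edr": from_edr,
    "email": from_email_gateway,
}


def normalize(source: str, payload: Dict[str, Any]) -> Alert:
    """Route a raw payload to its adapter; unknown sources fail loudly."""
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for source {source!r}") from None
    return adapter(payload)
```

Under this design, supporting a new detection tool means registering one more adapter, while the investigation logic that consumes `Alert` objects stays unchanged.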

Investigation Use Cases

Security leaders expect AI to investigate alerts across the entire stack: endpoint, identity, network, cloud, and email.

Tier-1 vendor tools still cover only narrow slices:

- Microsoft focuses on phishing and insider-risk workflows

- Palo Alto and CrowdStrike center on endpoint and identity

- Google’s agents are still at an early stage and tied to SIEM logs

Transparency and Consistency of LLMs

One of the biggest challenges in 2025 is LLM stability.

According to Qevlar’s recent study, even simple alerts produce inconsistent investigation paths.

- Consistency rarely exceeds 75%

- Complex alerts can generate nearly 100 unique investigation paths

- Identical alerts may receive different severity ratings or classifications
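If you want to check figures like these against a tool you are evaluating, one rough approach is to replay the same alert several times and compare the investigation paths. The sketch below assumes that "consistency" means the share of runs that follow the most common path; the study's own definition is not given here, so treat this as one plausible reading. The function name and step labels are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def path_consistency(runs: Sequence[Sequence[str]]) -> Dict[str, float]:
    """Summarise how repeatable a set of investigation runs is.

    Each run is the ordered list of steps the tool took for the SAME alert.
    """
    if not runs:
        raise ValueError("need at least one run")
    # Two runs count as the same path only if they take the same steps
    # in the same order.
    counts = Counter(tuple(run) for run in runs)
    most_common_count = counts.most_common(1)[0][1]
    return {
        "runs": len(runs),
        "unique_paths": len(counts),
        "consistency": most_common_count / len(runs),
    }


# Example: four replays of one alert, one of which reorders two steps.
replays = [
    ["enrich_ip", "query_logs", "verdict"],
    ["enrich_ip", "query_logs", "verdict"],
    ["enrich_ip", "query_logs", "verdict"],
    ["query_logs", "enrich_ip", "verdict"],
]
summary = path_consistency(replays)  # 2 unique paths, consistency 0.75
```

The same counting works for severity ratings or classifications: pass each run as a one-element list holding its verdict.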

CrowdStrike’s Charlotte AI and Microsoft Security Copilot generate summaries without revealing how they reached their conclusions, making it nearly impossible for analysts to build trust in the results.

Platforms that combine LLMs with orchestration frameworks deliver far more reproducible and explainable results.

How to Choose The Best AI Solution For Your SOC

With so many AI tools on the market and so much variation in maturity, here’s what matters most in 2026:

1. Can it cover your real alert landscape?

Does it only handle phishing or endpoint alerts, or can it also investigate SIEM, identity, cloud, and network alerts?

2. Does it work with the tools you already have?

Is it locked into one vendor’s ecosystem, or can it ingest alerts from any SIEM, XDR, SOAR, EDR, email, or identity platform?

3. How transparent is the AI’s reasoning?

Can analysts see every investigation step, or does the tool only provide a high-level summary with no explanation?

4. Are the investigation results consistent?

Does the platform rely on a single LLM, or does it use an orchestration layer that ensures stable, repeatable outcomes?

5. How fast is the time to value?

Does it require a long learning period, tuning, and prompting, or can it start delivering results as soon as it receives the first alert?

6. How deep does the enrichment go, and does it adapt to your context?

Does it:

- Use threat intelligence out of the box?

- Sandbox files?

- Analyze URLs and emails with semantic or vision models?

- Learn from your analysts’ feedback, business context, and historical data?

7. For MSSPs: Can it scale across all clients?

Does it support multi-tenancy, role-based separation, and data isolation?
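The seven questions above can be turned into a simple weighted scorecard for comparing shortlisted tools. The sketch below is one way to do it. The criterion names, the weights, and the 0 to 5 rating scale are all placeholders to adapt to your own priorities, not recommendations.

```python
from typing import Dict

# Criteria mirror the seven questions above. The weights are hypothetical
# placeholders; adjust them to reflect what matters in your SOC.
WEIGHTS: Dict[str, float] = {
    "alert_coverage": 3,
    "integrations": 3,
    "transparency": 2,
    "consistency": 2,
    "time_to_value": 1,
    "enrichment_depth": 2,
    "multi_tenancy": 1,  # set to 0 if you are not an MSSP
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Return a 0-5 score from per-criterion ratings on a 0-5 scale."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in weights:
        if not 0 <= ratings[name] <= 5:
            raise ValueError(f"rating for {name!r} must be between 0 and 5")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Weighted average, so the result stays on the same 0-5 scale.
    return sum(weights[name] * ratings[name] for name in weights) / total_weight
```

Rate each candidate after a proof of concept on your own alerts rather than from vendor material, then compare the resulting scores side by side.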

Planning your 2026 AI strategy? See Qevlar running in your SOC with same-day deployment. Book a free demo